May 20th, 2012 • 3 Comments

In previous blog posts I demoed what a Python based DTrace consumer can be used for: live inspection (callgraphs) of running programs, nice visualizations or just plain tracing. Especially on SmartOS (one of the many platforms with DTrace support) I found it a bit annoying to deal with DTrace. Since SmartOS itself is headless, I was thinking about creating a web based editor for DTrace scripts which would then create nice visualizations of the aggregated data. This simple IDE is written as a Django application and makes use of the Python based DTrace consumer.

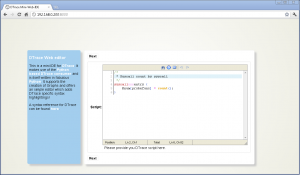

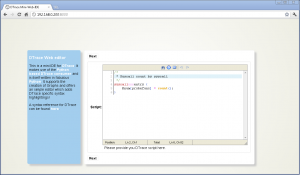

So here is a first shot of the web based DTrace Mini-IDE (couldn’t come up with a better name :-))

Web based DTrace IDE

As you can see it is running inside of Chrome on a Windows box – just to make sure you believe it is indeed web based. Now let’s take a look at all the features:

Syntax highlighting & Error detection

The user is guided through 3 steps: writing the DTrace script, running it, and viewing the output. The editor in the first step features DTrace specific syntax highlighting. Variables, built-in variables, functions, aggregation functions and a set of providers are highlighted accordingly. Comments are also shown in their own color.

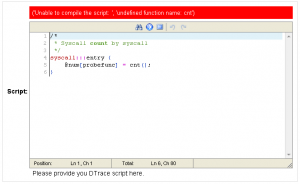

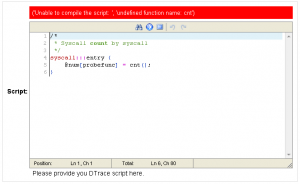

In addition, the editor will try to compile the script and show possible errors. It uses the Python binding for libdtrace to compile the script and returns any errors. The following screenshot shows the error reported when an unknown aggregation function is used:

Wrong aggregation function name

Running DTrace

Clicking next to reach the second step shows a set of options. The user can enter the time in seconds for which DTrace should aggregate data (in this case 2, or 0 to aggregate continuously) and choose whether a chart should be generated from the aggregated data:

Options for running DTrace

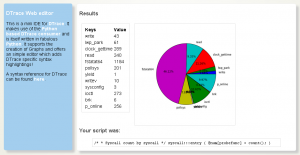

Displaying the result

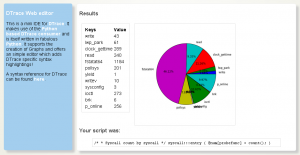

When done, the aggregated data will be displayed. For example, when you use the following one-liner (syscall count by syscall):

    syscall:::entry {
        @num[probefunc] = count();
    }

The result is displayed as a pie chart:


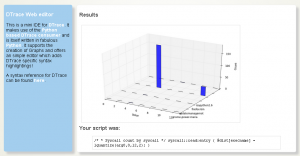

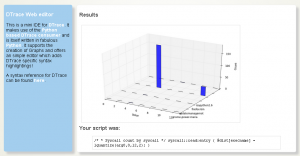
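How the counts become pie slices is not shown in this post. The following is only a minimal sketch, assuming the aggregate arrives as a plain dict of name to count; the function name `to_pie_rows`, the `top` parameter and the sample numbers are made up for illustration. It keeps the largest entries and folds the rest into one slice so the chart stays readable.

```python
def to_pie_rows(aggregate, top=5):
    """Turn {name: count} into [(label, count), ...] rows for a pie chart."""
    # Largest counts first, so the biggest slices come first.
    ranked = sorted(aggregate.items(), key=lambda item: item[1], reverse=True)
    rows = ranked[:top]
    # Everything beyond the top entries is summed into a single slice.
    rest = sum(count for _, count in ranked[top:])
    if rest:
        rows.append(('other', rest))
    return rows


# Hypothetical counts, shaped like the result of the one-liner above.
sample = {'read': 120, 'write': 80, 'ioctl': 40, 'close': 7, 'open': 3}
rows = to_pie_rows(sample, top=3)
```

With `top=3` the two smallest entries end up in a single `other` row.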

Or to give another example (A read distribution):

    syscall::read:entry {
        @dist[execname] = lquantize(arg0, 0, 12, 2);
    }

The result would be a bit different since the aggregation function lquantize is used. The data is displayed from 0 to 12 in steps of 2. The Z-Axis shows the name of the executable:

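To make the bucketing concrete, here is a small pure Python sketch of what a linear quantization with lower bound 0, upper bound 12 and step 2 does to a list of values. It is not the consumer's implementation; the function names and the sample values are assumptions for illustration. Values below the lower bound and values at or above the upper bound each get their own overflow bucket.

```python
from collections import Counter


def lquantize_bucket(value, lower, upper, step):
    """Return the label of the linear bucket that value falls into."""
    if value < lower:
        return '< %d' % lower
    if value >= upper:
        return '>= %d' % upper
    # Round down to the start of the bucket that contains the value.
    return str(lower + ((value - lower) // step) * step)


def lquantize(values, lower, upper, step):
    """Count how many values land in each bucket."""
    return Counter(lquantize_bucket(v, lower, upper, step) for v in values)


# Hypothetical sample values, bucketed from 0 to 12 in steps of 2.
dist = lquantize([0, 1, 3, 4, 11, 12, 40], 0, 12, 2)
```

Here 0 and 1 share the bucket labelled `0`, while 12 and 40 both land in `>= 12`.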

Categories: Personal • Tags: Analytics, DTrace, Python • Permalink for this article

April 1st, 2012 • Comments Off on Python DTrace consumer and AMQP

This blog post walks through a very simple example of how you can use the Python DTrace consumer together with AMQP.

The scenario for this example is pretty simple: let’s say you want to trace data on one machine and display it on another. Still, the data should be up to date, so whenever a DTrace probe fires the data should be transmitted to the other host. This can be done with AMQP.

The examples here assume you have a RabbitMQ server running and have pika installed.

Within this example two Python scripts are involved. One for sending data (send.py) and one for receiving (receive.py). The send.py script will launch the DTrace consumer and gather data for 5 seconds:

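    import time

    import dtrace

    # SCRIPT holds the D script; walk is the callback shown below.
    thr = dtrace.DTraceConsumerThread(SCRIPT, walk_func=walk)
    thr.start()
    # Let the consumer gather data for 5 seconds, then shut it down.
    time.sleep(5)
    thr.stop()
    thr.join()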

The DTrace consumer is given a callback function which will be called whenever the DTrace probes fire. Within this callback we create a message and pass it on to the AMQP broker:

    def walk(id, key, value):
        # Publish one message per aggregation record, keyed by its first key.
        channel.basic_publish(exchange='', routing_key='dtrace', body=str({key[0]: value}))
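The channel setup is not part of the excerpt. A minimal sketch of how it could look with pika's blocking connection follows; the host name, the helper names `open_channel` and `make_walk`, and the `RecordingChannel` stand-in are assumptions made for illustration, not code from send.py. The stand-in only exists so the callback can be tried without a broker.

```python
def open_channel(host='localhost', queue='dtrace'):
    """Connect to the broker and make sure the queue exists."""
    import pika  # imported lazily so the rest of the sketch runs without it
    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.queue_declare(queue=queue)
    return connection, channel


def make_walk(channel, queue='dtrace'):
    """Build a walk callback bound to the given channel."""
    def walk(id, key, value):
        # Same message format as in the post: str() of a one-entry dict.
        channel.basic_publish(exchange='', routing_key=queue,
                              body=str({key[0]: value}))
    return walk


class RecordingChannel(object):
    """Stand-in for a pika channel, used only to try the callback offline."""

    def __init__(self):
        self.sent = []

    def basic_publish(self, exchange, routing_key, body):
        self.sent.append((routing_key, body))


fake = RecordingChannel()
make_walk(fake)(0, ['python'], 264)
```

Against a real broker, the callback would be built from the channel returned by `open_channel()` and passed to the consumer as `walk_func`.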

The channel has previously been initialized (see this tutorial for more details). Now AMQP messages are passed around with up-to-date information from DTrace. All that is left is implementing a ‘receiver’ in receive.py. This is again straightforward and also works using a callback function:

    def callback(ch, method, properties, body):
        # body carries the message published by send.py.
        print 'Received: ', body

    if __name__ == '__main__':
        channel.basic_consume(callback, queue='dtrace', no_ack=True)
        try:
            channel.start_consuming()
        except KeyboardInterrupt:
            connection.close()
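Since send.py publishes `str({key[0]: value})`, the receiver gets the textual form of a small dict. One way to turn that back into a name and a count is `ast.literal_eval`, which only evaluates literals and is therefore safer than `eval`. This is a sketch under that assumption; the helper name `format_message` is made up and is not taken from receive.py.

```python
import ast


def format_message(body):
    """Decode one message body into 'Received: <name> <count>' lines."""
    if isinstance(body, bytes):
        # Brokers hand over raw bytes; decode before parsing.
        body = body.decode('utf-8')
    data = ast.literal_eval(body)
    return ['Received: %s %s' % (name, count) for name, count in data.items()]


lines = format_message("{'python': 264}")
```

A callback could print each returned line instead of the raw body.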

Start the receive.py Python script first. Then start the send.py Python script. You can even start multiple send.py scripts on multiple hosts to get an overall view of the system calls made by processes on all machines 🙂

The send.py script counts system calls and will send AMQP messages as new data arrives. You will see in the output of the receive.py script that data arrives almost instantly:

    $ ./receive.py
    Received: python 264
    Received: wnck-applet 5
    Received: metacity 6
    Received: gnome-panel 15
    [...]

Now you can build live updating visualizations of the data gathered by DTrace.

Categories: Personal • Tags: Analytics, DTrace, Python • Permalink for this article

March 29th, 2012 • Comments Off on Python DTrace consumer meets the web

I had a look at my Python DTrace consumer last night and realized it needed a bit of an overhaul. I already demoed that you can make some visualizations with it, like live updating callgraphs. Still, it was missing some basic functionality. For example, it only supported some DTrace aggregation functions: sum, min, max and count. Now I have added support for avg, quantize and lquantize.

Now I just needed to write about 50 LOC to do something nice 🙂 Those ~50 lines are the implementation of a WSGI app using Mako as a template engine. Embedded in the Mako templates are Google Charts, and those charts show information coming out of the Python consumer. Now all that is left is to point my browser to my SmartOS machine and get up-to-date graphs! For example a pie chart which shows system calls by process:


Or using quantize I can browse a nice read size distribution (aka: how many bytes do my processes usually read?):


With all this it is also possible to plot graphs on the latency of node.js apps :-):

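The WSGI side of such a setup can be small. The sketch below is not the app described here: it swaps the Mako template for a plain JSON response and uses a hard-coded, hypothetical `AGGREGATE` dict in place of live consumer data. The rows start with a header row, which is the array layout that Google Charts' `arrayToDataTable` accepts on the browser side.

```python
import json

# Hypothetical snapshot; a real app would read this from the DTrace consumer.
AGGREGATE = {'python': 264, 'metacity': 6, 'gnome-panel': 15}


def app(environ, start_response):
    """WSGI app that serves the aggregate as chart rows in JSON."""
    rows = [['Process', 'System calls']]
    rows += [[name, count] for name, count in sorted(AGGREGATE.items())]
    body = json.dumps(rows).encode('utf-8')
    start_response('200 OK', [('Content-Type', 'application/json'),
                              ('Content-Length', str(len(body)))])
    return [body]


def serve(port=8000):
    """Run the app with the reference server from the standard library."""
    from wsgiref.simple_server import make_server
    make_server('', port, app).serve_forever()
```

Calling `serve()` starts a development server on port 8000 (an arbitrary choice); a page with a chart could then fetch the rows from it.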

Again, documentation on writing DTrace consumers is almost non-existent. But with some ‘inspiration’ from Bryan Cantrill and the original C based consumer I was able to get it to work.

Categories: Personal • Tags: Analytics, DTrace, Python, SmartOS • Permalink for this article

March 23rd, 2012 • Comments Off on Python DTrace consumer on SmartOS

As mentioned in previous blog posts (1 2 3) I wrote a Python DTrace consumer a while ago. The cool thing is that you can now trace Python (as provider) and consume the ‘aggregate’ in Python (as consumer) as well :-). Some screenshots and suggestions for what you can do with it are on the GitHub page.

I did not have much spare time lately, but I got a chance last night to test my Python based DTrace consumer on SmartOS, Solaris 11 and OpenIndiana, and can confirm that it runs on all 3.

To get it up and running on SmartOS you will first need to install some dependencies. Use the 3rd party repositories as described in the SmartOS wiki to get pkg up and running:

    pkg install git gcc-44 setuptools-26 gnu-binutils

When that is done we will install cython using pip (you could however also use ctypes), then clone and install the consumer code:

    easy_install pip
    pip install cython
    git clone git://github.com/tmetsch/python-dtrace.git
    cd python-dtrace/
    python setup.py install

Now that this is done we can do the obligatory ‘Hello World’ to get things going:

Python DTrace consumer on SmartOS

For more examples refer to the examples folder within the python-dtrace repository.

Categories: Personal • Tags: DTrace, Python, SmartOS • Permalink for this article

February 28th, 2012 • Comments Off on SmartStack = SmartOS + OpenStack (Part 3)

This is the third part of the blog post series. Previous parts can be found here: 1 2

To ensure the proper startup of nova-compute we will register it using SMF, which will also increase the dependability of the service. To start we will define a properties file for nova-compute. We will be using a Glance and RabbitMQ server which run on a different host:

    --connection_type=fake
    --glance_api_servers=192.168.56.101:9292
    --rabbit_host=192.168.56.101
    --rabbit_password=foobar
    --sql_connection=mysql://root:foobar@192.168.56.101/nova

We will store these contents in the file /data/workspace/smartos.cfg. We will also create an XML file with the manifest definition, which has some dependencies defined:

    <?xml version='1.0'?>
    <!DOCTYPE service_bundle SYSTEM '/usr/share/lib/xml/dtd/service_bundle.dtd.1'>
    <service_bundle type='manifest' name='openstack'>
      <service name='openstack/nova/compute' type='service' version='0'>
        <create_default_instance enabled='true'/>
        <single_instance/>
        <dependency name='fs' grouping='require_all' restart_on='none' type='service'>
          <service_fmri value='svc:/system/filesystem/local'/>
        </dependency>
        <dependency name='net' grouping='require_all' restart_on='none' type='service'>
          <service_fmri value='svc:/network/physical:default'/>
        </dependency>
        <dependency name='zones' grouping='require_all' restart_on='none' type='service'>
          <service_fmri value='svc:/system/zones:default'/>
        </dependency>
        <exec_method name='start' type='method' exec='/usr/bin/nohup /data/workspace/nova/bin/nova-compute --flagfile=/data/workspace/smartos.cfg &amp;' timeout_seconds='60'>
          <method_context>
            <method_environment>
              <envvar name="PATH" value="/ec/bin/:$PATH"/>
            </method_environment>
          </method_context>
        </exec_method>
        <exec_method name='stop' type='method' exec=':kill' timeout_seconds='60'>
          <method_context/>
        </exec_method>
        <stability value='Unstable' />
      </service>
    </service_bundle>
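Before importing, it can be worth checking that the manifest is well-formed XML; a bare `&` inside an attribute, for instance, has to be written as `&amp;`. The sketch below uses only the Python standard library and is not part of SMF tooling. The function name `summarize_manifest` and the shortened `SAMPLE` manifest are assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def summarize_manifest(text):
    """Parse an SMF manifest and map each service to its dependency FMRIs."""
    root = ET.fromstring(text)  # raises ET.ParseError if not well-formed
    summary = {}
    for service in root.iter('service'):
        fmris = [fmri.get('value')
                 for dep in service.findall('dependency')
                 for fmri in dep.findall('service_fmri')]
        summary[service.get('name')] = fmris
    return summary


# Shortened, hypothetical manifest with a single dependency.
SAMPLE = """<service_bundle type='manifest' name='openstack'>
  <service name='openstack/nova/compute' type='service' version='0'>
    <dependency name='fs' grouping='require_all' restart_on='none' type='service'>
      <service_fmri value='svc:/system/filesystem/local'/>
    </dependency>
  </service>
</service_bundle>"""

summary = summarize_manifest(SAMPLE)
```

A parse error here points at the offending line and column, which is usually quicker to act on than a failed import.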

This can be imported using the svccfg command. After doing so we can use all the known commands to verify all is up and running:

    [root@08-00-27-e3-a9-19 /data/workspace]# svcs -p openstack/nova/compute
    STATE          STIME    FMRI
    online         14:04:52 svc:/openstack/nova/compute:default
                   14:04:51     3142 python

Next up: integrating vmadm…

Categories: Personal • Tags: Cloud, SmartOS • Permalink for this article